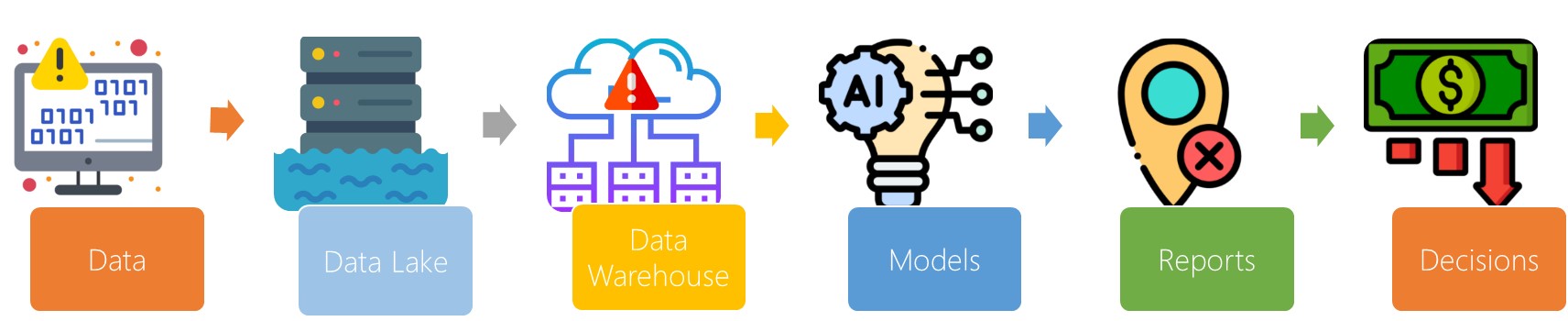

Data observability can be defined as the level of awareness an enterprise has of its data platform. Without this awareness, downstream data teams can’t trace problems upstream and can’t improve their processes. Different levels of data observability are defined by the metadata collected. Data observability is a key component of big data analytics.

Ingested data quality

Monitoring the quality of ingested data and monitoring observability are critical components of data management systems. Quality monitoring helps ensure that the system is working properly and that new errors are not introduced into the data. Together, they produce a data management system that delivers more reliable results.

Data ingestion is the process of acquiring data from a variety of sources, then transforming and loading it. Ingestion is often challenging because the sources are fragmented, which makes quality crucial. Ingestion should be optimized to minimize errors and improve the usability of the data.

Observability is a good starting point for data management. Observability platforms help close the gap between data ingestion pipeline tools and data quality monitoring. Observability allows you to act on the data as it is ingested, which helps prevent bad data from entering your system.
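Acting on data as it is ingested can be pictured as a validation gate in front of the data store. The following is a minimal sketch, not a reference to any particular observability platform: the `REQUIRED_FIELDS` schema, the `validate` and `ingest` functions, and the quarantine list are all hypothetical names chosen for illustration.

```python
from typing import Any

Record = dict[str, Any]

# Hypothetical schema: field name -> expected Python type (an assumption).
REQUIRED_FIELDS = {"id": int, "amount": float}


def validate(record: Record) -> list[str]:
    """Return a list of problems found in one record (empty if it is clean)."""
    problems = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in record or record[name] is None:
            problems.append(f"missing {name}")
        elif not isinstance(record[name], expected_type):
            problems.append(f"{name} is not {expected_type.__name__}")
    return problems


def ingest(records: list[Record]) -> tuple[list[Record], list[tuple[Record, list[str]]]]:
    """Split incoming records into accepted ones and quarantined ones."""
    accepted, quarantined = [], []
    for record in records:
        problems = validate(record)
        if problems:
            # Bad records are set aside with the reason, not silently dropped.
            quarantined.append((record, problems))
        else:
            accepted.append(record)
    return accepted, quarantined


if __name__ == "__main__":
    good, bad = ingest([{"id": 1, "amount": 9.5}, {"id": "2", "amount": 3.0}])
    print(len(good), "accepted;", len(bad), "quarantined")
```

Keeping the rejected records together with the reason for rejection is a deliberate choice in this sketch: it lets a team trace the problem back to its source instead of only counting failures.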

Ingested data consistency

Data ingestion is a complex process that can cause performance issues. It requires a proper assessment of the data’s format and structure, and it must keep data from different sources consistent. It can also be affected by security breaches. For these reasons, it is crucial to use a data ingestion solution that is designed to protect the integrity of the data.
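One way to picture a consistency check across sources is to compare the field types each source actually delivers. The sketch below is an illustration under assumptions: `infer_schema`, `schema_conflicts`, and the source names `crm` and `billing` are hypothetical, and real pipelines usually compare against a declared schema rather than an inferred one.

```python
from typing import Any

Row = dict[str, Any]


def infer_schema(rows: list[Row]) -> dict[str, set[str]]:
    """Map each field name to the set of type names observed for it."""
    schema: dict[str, set[str]] = {}
    for row in rows:
        for key, value in row.items():
            schema.setdefault(key, set()).add(type(value).__name__)
    return schema


def schema_conflicts(sources: dict[str, list[Row]]) -> dict[str, dict[str, Any]]:
    """Report fields whose observed types differ (or are absent) between sources."""
    schemas = {name: infer_schema(rows) for name, rows in sources.items()}
    all_fields = set().union(*(s.keys() for s in schemas.values()))
    conflicts = {}
    for field_name in sorted(all_fields):
        # None marks a source that never delivered this field.
        seen = {
            name: sorted(s[field_name]) if field_name in s else None
            for name, s in schemas.items()
        }
        distinct = {tuple(v) if v is not None else None for v in seen.values()}
        if len(distinct) > 1:
            conflicts[field_name] = seen
    return conflicts


if __name__ == "__main__":
    report = schema_conflicts({
        "crm": [{"id": 1, "email": "a@example.com"}],
        "billing": [{"id": "1", "email": "a@example.com"}],
    })
    print(report)
```

In this example the two sources agree on `email` but deliver `id` as an integer in one and a string in the other, which is the kind of mismatch that is cheap to catch at ingestion and expensive to find later.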

Observability is the ability to identify underlying problems in data. It is essential to monitor data quality during ingestion and to ensure that ingestion doesn’t degrade the system’s performance over time. Ingestion monitoring identifies and fixes errors as data enters the system. It also ensures that the system does not introduce new errors into the data.

Data ingestion can be real-time or batch. Batch ingestion collects data and loads it in groups, typically at scheduled intervals. Real-time ingestion collects, processes, and integrates data as it is generated. It allows a data scientist to monitor and analyze changes as they occur. It is particularly useful for data sources that continuously emit data, and it enables low-latency analytics.
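The difference between the two modes can be sketched with the same transformation applied in two ways: to a complete list at once, or to records one at a time as they arrive. This is a simplified illustration, and `normalize`, `ingest_batch`, `ingest_stream`, and `sensor_feed` are hypothetical names; a production system would read from a message queue or a file store rather than an in-memory generator.

```python
from typing import Iterable, Iterator


def normalize(record: dict) -> dict:
    """Hypothetical transformation shared by both modes: parse the value field."""
    return {**record, "value": float(record["value"])}


def ingest_batch(records: list[dict]) -> list[dict]:
    """Batch mode: the whole group is available up front and processed together."""
    return [normalize(r) for r in records]


def ingest_stream(source: Iterable[dict]) -> Iterator[dict]:
    """Real-time mode: each record is processed and emitted as soon as it arrives."""
    for record in source:
        yield normalize(record)


def sensor_feed() -> Iterator[dict]:
    """Stand-in for a source that continuously emits data (assumed for the demo)."""
    for i in range(3):
        yield {"sensor": "s1", "value": str(20 + i)}


if __name__ == "__main__":
    print(ingest_batch([{"sensor": "s1", "value": "1.5"}]))
    for reading in ingest_stream(sensor_feed()):
        print(reading)  # available immediately, before the feed has finished
```

Because `ingest_stream` is a generator, a consumer sees each reading without waiting for the rest of the feed, which is what makes low-latency analysis possible.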

Ingested data errors

Data observability encompasses the activities and technologies that help you understand the health of your data. The practice is a natural evolution of the DataOps movement and is the missing piece of the puzzle in agile data product development. With observability, data products are monitored continuously so that problems can be remedied as they arise.

Data observability provides actionable insights about your data quality and your data systems. It identifies bad data and system problems before they spread through the platform. Observability helps you determine where and when to make improvements. Data quality is crucial for organizations of all sizes and types, and the consequences of bad data can be enormous.

Observability is essential for preventing business-impacting errors. However, it requires extensive monitoring, often under resource constraints. It also requires an understanding of the platform’s topology and context. The first step in building a robust observability framework is to monitor datasets and operational processes. This gives you big-picture context for your data platform and helps you identify the root causes of errors.
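Monitoring a dataset usually starts with a few pieces of metadata collected on every load. The sketch below assumes three such metrics (row count, null rate, and staleness) and hypothetical thresholds; the function names `dataset_metrics` and `check`, and the `loaded_at` field, are illustrative and not taken from any specific tool.

```python
from datetime import datetime, timedelta, timezone
from typing import Any


def dataset_metrics(rows: list[dict[str, Any]], timestamp_field: str, now: datetime) -> dict[str, Any]:
    """Collect basic metadata about one dataset snapshot."""
    total_cells = sum(len(row) for row in rows)
    null_cells = sum(1 for row in rows for value in row.values() if value is None)
    newest = max(
        (row[timestamp_field] for row in rows if row.get(timestamp_field) is not None),
        default=None,
    )
    return {
        "row_count": len(rows),
        "null_rate": null_cells / total_cells if total_cells else 0.0,
        # Staleness: how long ago the most recent record was loaded.
        "staleness": (now - newest) if newest is not None else None,
    }


def check(metrics: dict[str, Any], min_rows: int, max_null_rate: float, max_staleness: timedelta) -> list[str]:
    """Compare metrics with thresholds (assumed values) and return alert messages."""
    alerts = []
    if metrics["row_count"] < min_rows:
        alerts.append("row count below minimum")
    if metrics["null_rate"] > max_null_rate:
        alerts.append("null rate above maximum")
    if metrics["staleness"] is None or metrics["staleness"] > max_staleness:
        alerts.append("data is stale")
    return alerts


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    rows = [
        {"id": 1, "loaded_at": now - timedelta(hours=2)},
        {"id": None, "loaded_at": now - timedelta(hours=30)},
    ]
    metrics = dataset_metrics(rows, "loaded_at", now)
    print(check(metrics, min_rows=1, max_null_rate=0.1, max_staleness=timedelta(hours=1)))
```

Recording these metrics on each run, rather than only alerting, builds the history needed to trace a downstream problem back to the load where it first appeared.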